Why traditional AAC often fails

Neurotypical children in higher-income families are exposed to roughly 45 million words by age 4 (Hart & Risley, 1995). AAC users in twice-weekly therapy receive approximately 3,000 to 6,000 models annually at recommended dosages (Binger & Light, 2007). That is a staggering disparity, and it worsens when clinicians struggle to meet modeling targets in real-world practice.

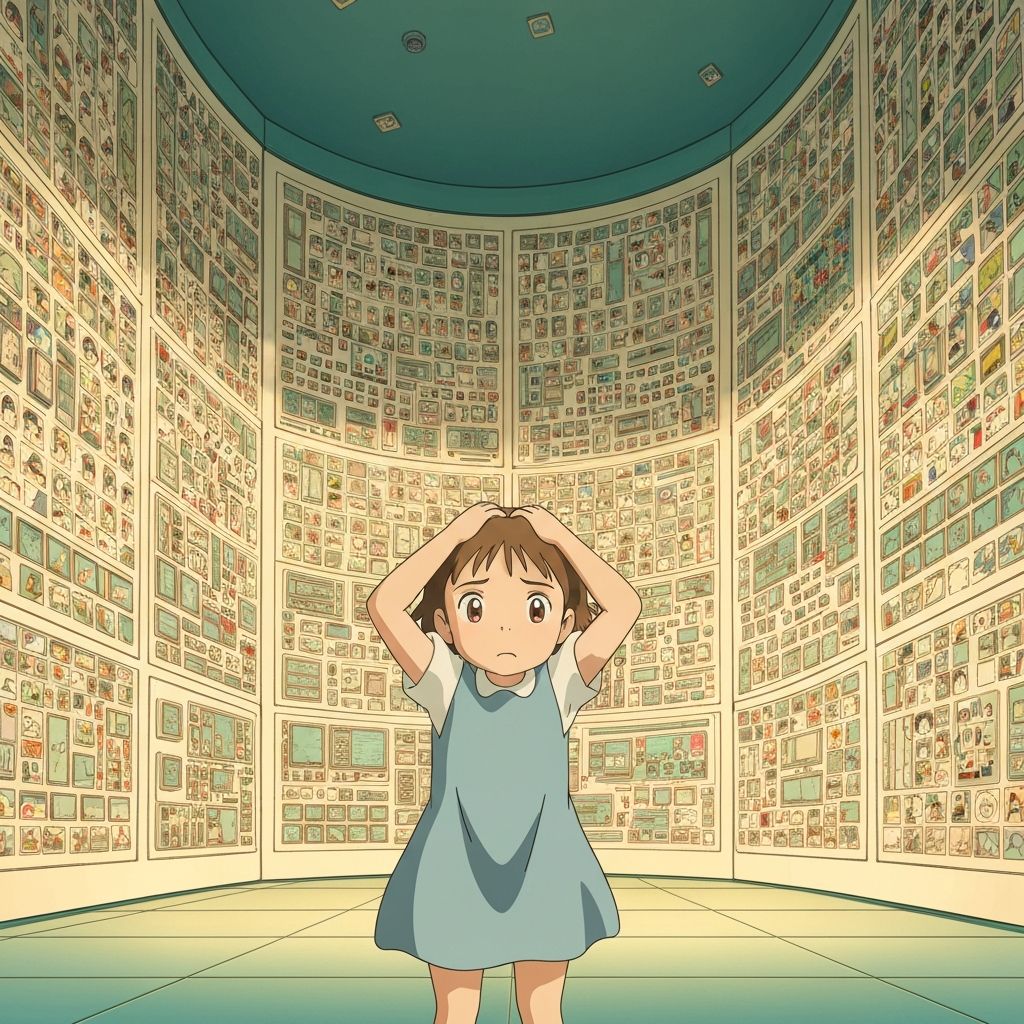

Do the math: at 3,000 to 6,000 models per year, matching 45 million words would take well over 1,000 years of therapy alone (roughly 7,500 to 15,000 years). That's not a gap; it's a chasm.
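The arithmetic behind that estimate is simple division, sketched below using the figures quoted above. It assumes, as a simplification, that one therapy model counts the same as one word heard, that the modeling rate stays constant, and that nothing else contributes; the helper name `years_to_match` is hypothetical.

```python
# Figures quoted in the text; the equivalence of "model" and "word" is an assumption.
WORDS_BY_AGE_4 = 45_000_000        # Hart & Risley (1995), higher-income families
MODELS_PER_YEAR = (3_000, 6_000)   # Binger & Light (2007), twice-weekly therapy


def years_to_match(target_words: int, models_per_year: int) -> float:
    """Years of constant-rate therapy needed for cumulative models to reach target_words."""
    return target_words / models_per_year


for rate in MODELS_PER_YEAR:
    years = years_to_match(WORDS_BY_AGE_4, rate)
    print(f"{rate:,} models/year -> {years:,.0f} years")
```

Running it prints 15,000 years at 3,000 models per year and 7,500 years at 6,000 models per year, which is why "over 1,000 years" is, if anything, an understatement.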

InnerVoice solution: The app itself becomes a modeling partner. Every interaction demonstrates language use. Every button tap shows what words mean in context.